- #AMORPHOUSDISKMARK SOFTWARE#

- #AMORPHOUSDISKMARK CODE#

- #AMORPHOUSDISKMARK FREE#

- #AMORPHOUSDISKMARK MAC#

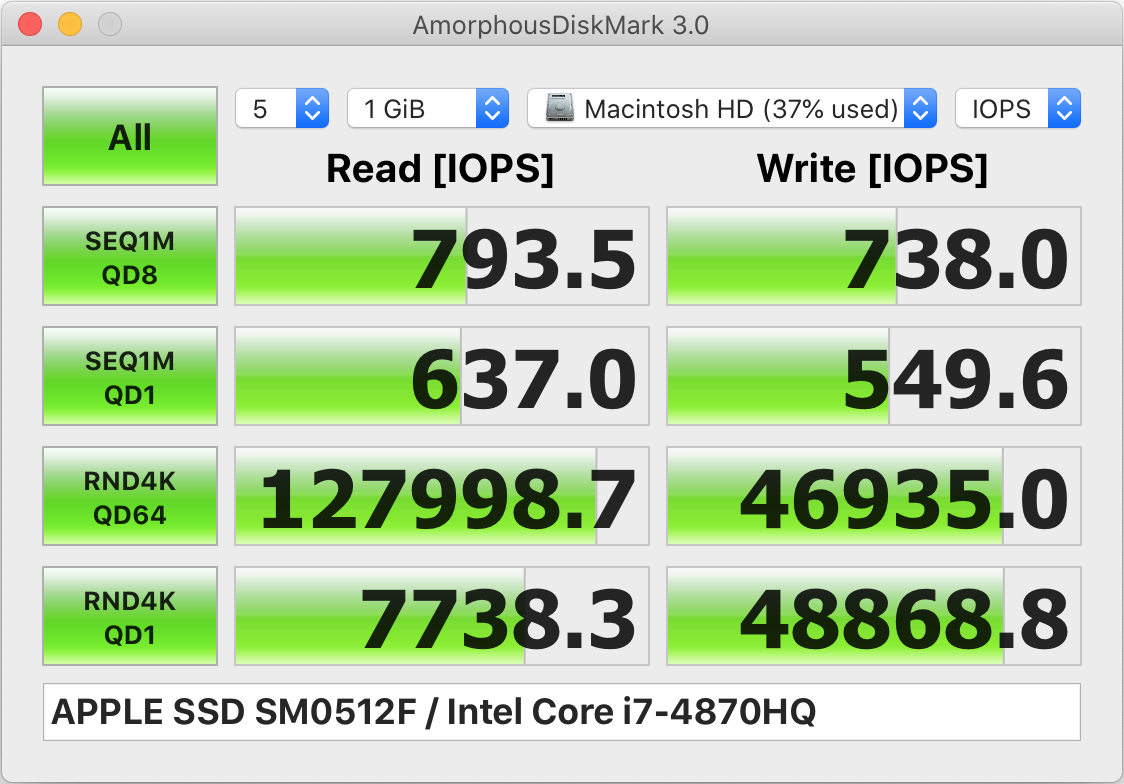

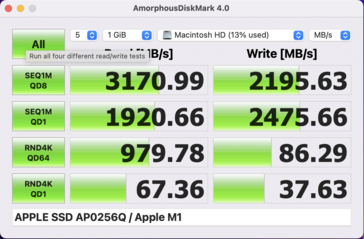

Over the last couple of weeks, I’ve read more benchmarks and other performance measurements on SSDs than I’ve ever seen before, thanks to so many of you who have contributed results from your own tests. This article explains some of the difficulties in interpreting this avalanche of data, and how we can move forward.

We run benchmark tests for different reasons. For some, it’s to prove that their purchase choice performs better than those of others. For those in the trade, it’s to show how fast their product is compared with their competitors. What I want to know, though, is how fast storage will be in use, typically doing mundane tasks like reading and saving files, and copying in the Finder. That’s important, because some benchmarks use quite different code from that normally used by apps, and features in storage can also be tuned to deliver better benchmark results even though they’re slower in real use. So any benchmark which runs crafted code calling low-level functions in C doesn’t tell me as much as one using standard FileHandle calls from Swift or Objective-C. And if the test doesn’t explain exactly what it does, we simply can’t trust what it’s doing.

Having decided what to benchmark, we then need to get its best estimate, either with small dispersion or known variance. In principle, all these tests should be deterministic, with negligible noise or error, but in practice a great many other factors can come into play, including:

- combinations of hardware, including case/enclosure, cable and host Mac.
- different versions of macOS, kexts, firmware, etc.
- other software running which may access the disk during testing.
- variation in negotiated bus/port speeds.
- availability of SLC write cache on SSDs.
- caching/buffering in memory, both on the Mac and in the storage.

What you can’t do is use individual data taken from the population to make the sort of comparisons you’d make in controlled experiments, because too many of those variables are uncontrolled. This is a trap we all fall into at times. Suppose we see 99 reports of a particular SSD clocking up a write speed of 2.8 GB/s, and a single claim of 3.4 GB/s. Does that mean that those 99 reports were in error? Statistically, that singular result is an outlier. It might be an outright lie (some people do say strange things online), a simple mistake for what should have been 2.4 GB/s, or an unreliable test because of any of the factors above and below.

By far the most popular benchmarks used in macOS are those generously provided free by Blackmagic and Amorphous.

Blackmagic Disk Speed Test is primarily intended to help users of Blackmagic products determine whether their storage is capable of the write and read performance required to handle different types of video. Its analogue speedometer display is fun, but constantly changes during testing, leaving you guessing what the true transfer rates were. This makes its results subjective and susceptible to user interpretation.

AmorphousDiskMark is backed up by more information, for instance that its default sequential read/write queue depth is 8, test iterations are 5, test size is 1 GiB, the test interval is 5 s, and the test duration limit is 5 s. For the most widely quoted figure of “SEQ1M QD8”, it’s described as “reading/writing the specified size file sequentially with 128 KiB blocks from the specified number of threads (queue depth).” It’s most commonly used in its default configuration, with 5 test iterations of 1 GiB, for which it “shows the median score”. Although taking the median of five results may appear a wise statistical precaution, the user is given no idea of the spread of results, so it’s easy to see how misleading that can be.
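To show why a median of five runs can hide what matters, here is a minimal Python sketch that reports the range and standard deviation of repeated runs alongside their median. It isn’t how AmorphousDiskMark works internally. The `summarize` function and both sets of timings are hypothetical, invented purely for illustration.

```python
import statistics


def summarize(runs):
    """Summarize repeated benchmark runs (GB/s), reporting spread alongside the median."""
    ordered = sorted(runs)
    return {
        "median": statistics.median(ordered),
        "min": ordered[0],
        "max": ordered[-1],
        "range": ordered[-1] - ordered[0],
        # Sample standard deviation needs at least two runs.
        "stdev": statistics.stdev(ordered) if len(ordered) > 1 else 0.0,
    }


# Hypothetical write speeds in GB/s from five runs on two different setups.
steady = [2.80, 2.81, 2.79, 2.80, 2.82]
erratic = [1.20, 2.80, 2.85, 3.30, 0.90]

for name, runs in (("steady", steady), ("erratic", erratic)):
    s = summarize(runs)
    print(f"{name}: median {s['median']:.2f}, range {s['min']:.2f}-{s['max']:.2f}, "
          f"stdev {s['stdev']:.2f}")
```

Both hypothetical setups report the same median of 2.80 GB/s. Yet one varies by only a few hundredths of a GB/s between runs, while the other swings by more than 2 GB/s. With only five runs, simply listing all five values may be the most honest summary of all.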

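The example of 99 reports at around 2.8 GB/s and a single claim of 3.4 GB/s can be made concrete with a common rule of thumb, Tukey’s fences. These flag any value more than 1.5 times the interquartile range below the first quartile or above the third. This minimal Python sketch uses that technique as a stand-in, not as a method the article prescribes. The `tukey_outliers` function is hypothetical, and so is the small spread given to the 99 reports. That spread is an assumption: if all 99 were exactly identical, the interquartile range would be zero and any deviation at all would be flagged.

```python
import statistics


def tukey_outliers(values, k=1.5):
    """Return values lying outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical reports: 99 write speeds spread between 2.75 and 2.85 GB/s,
# plus one claim of 3.4 GB/s.
reports = [2.75 + 0.01 * (i % 11) for i in range(99)] + [3.4]

print("median:", round(statistics.median(reports), 2))
print("outliers:", tukey_outliers(reports))
```

Run as is, this flags only the 3.4 GB/s claim, and the median of all 100 reports stays at 2.8 GB/s. A flag only says that a result is unusual. It can’t tell whether that’s because of a lie, a typo, or one of the uncontrolled factors listed earlier.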